One of the most confusing things that new programmers face is how to break down their program into smaller pieces. Sometimes we call this architecture, but I’m not sure that gives the right feel to the process. Maybe it’s more like collecting insects…

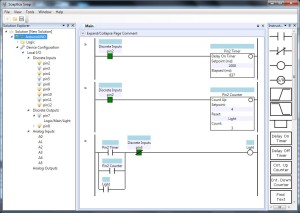

Imagine you’re looking at your ladder logic program under a microscope, and you pick out two rungs of logic at random. Now ask yourself, in the collection of rungs that is your program, do these two rungs belong close together, or further apart? How do you make that decision? Obviously we don’t just put rungs that look the same next to each other, like we would if we were entomologists, but there’s clearly some measure of what belongs together, and what doesn’t.

Somehow this is related to the concept of Cohesion from computer science. Cohesion is this nebulous measure of how well the things inside of a single software “module” fit together. That, of course, makes you wonder how they defined a software module…

There are many different ways of structuring your ladder logic. One obvious restriction is order of execution. Sometimes you must execute one rung before another rung for your program to operate correctly, and that puts a one-way restriction on the location of these two rungs, but they could still be located a long way apart.

Another obvious method of grouping rungs is by the physical concept of the machine. For instance, all the rungs for starting and stopping a motor, detecting a fault with that motor (failure to start), and summarizing the condition of that motor are typically together in one module (or file/program/function block/whatever).

Still we sometimes break that rule. We might, for instance, have the motor-failed-to-start fault in the motor program, but we likely want to map this fault to an alarm on the HMI, and that alarm will be driven in some rung under the HMI alarms program, potentially a long way away from the motor program. Why did we draw the line there? Why not put the alarm definition right beside the fault definition that drives it? In most cases it’s because the alarms, by necessity, are addressed by a number (or a bit position in a bit array) and we have to make sure we don’t double-allocate an alarm number. That’s why we put all the alarms in one file in numerical order: we can see which numbers we’ve already used, and when alarm #153 comes up on the HMI, we can quickly find that alarm bit in the PLC by scrolling down to rung 153 (if we planned it right) in that alarms program.
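To make the numbering problem concrete, here is a rough sketch in Python rather than ladder logic. It is not how any particular PLC or HMI does it; the `AlarmTable` class, the tag name, and the 16-bit word size are all assumptions made up for illustration. The point is only that a single central table is what lets you catch a double-allocated alarm number.

```python
class AlarmTable:
    """Central alarm list: one number per alarm, no number used twice."""

    def __init__(self, size):
        self.size = size
        self.sources = {}  # alarm number -> name of the fault tag that drives it

    def allocate(self, number, fault_tag):
        """Reserve an alarm number for a fault tag, refusing duplicates."""
        if not 0 <= number < self.size:
            raise ValueError(f"alarm {number} is outside 0..{self.size - 1}")
        if number in self.sources:
            raise ValueError(
                f"alarm {number} is already used by {self.sources[number]}"
            )
        self.sources[number] = fault_tag

    def pack(self, tags, word_bits=16):
        """Pack active alarms into words, one bit per alarm number."""
        words = [0] * ((self.size + word_bits - 1) // word_bits)
        for number, fault_tag in self.sources.items():
            if tags.get(fault_tag, False):
                words[number // word_bits] |= 1 << (number % word_bits)
        return words


alarms = AlarmTable(size=256)
alarms.allocate(153, "Motor1.FailedToStart")  # hypothetical tag name
words = alarms.pack({"Motor1.FailedToStart": True})
```

Here alarm 153 ends up as a single bit in the packed words, which is the compact form that would be sent to the HMI, and a second `allocate(153, ...)` call fails loudly instead of silently sharing the number.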

I just want to point out that this is only a restriction of the HMI (and communication) technology. We group alarms into bit arrays for faster communication, but with PC-based control systems we’re nearing the day when we can just configure alarms without a numbering scheme. If you could configure your HMI software to alarm when any given tag in the PLC turned on, you wouldn’t even need an alarms file, let alone the necessity of separating the alarms from the fault logic.
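For comparison, this is roughly what the number-free version might look like, again as a Python sketch. The `ALARM_TEXT` table and the tag names are hypothetical, and no real HMI package is being described here.

```python
# Hypothetical HMI configuration: alarm text keyed by PLC tag name, no numbers.
ALARM_TEXT = {
    "Motor1.FailedToStart": "Motor 1 failed to start",
    "Motor2.FailedToStart": "Motor 2 failed to start",
}


def active_alarms(tags):
    """Return the message for every configured tag that is currently on."""
    return [text for tag, text in ALARM_TEXT.items() if tags.get(tag, False)]


messages = active_alarms(
    {"Motor1.FailedToStart": True, "Motor2.FailedToStart": False}
)
```

With nothing to allocate, the fault logic can stay in the motor program and the alarm is just a piece of configuration that points at it.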

Within a program, how should we order our rungs? Assuming you satisfied the execution order requirement I talked about above, I usually fall back on three rules of thumb: (1) tell a story, (2) keep things that change together grouped together, and (3) separate the “why” from the “how”.

What I mean by “tell a story” is that the rungs should be organized into a coherent thought process. The reader should be able to understand what you were thinking. First we calculate whozit #1, then whozit #2, then whozit #3, and then we average the three. That’s better than mixing the summing/averaging with the calculating of individual whozits.
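The same ordering, sketched in Python instead of rungs. The input names and the scaling factor are invented for the example; only the order of the steps matters.

```python
SCALE = 2.0  # made-up scaling factor, purely for illustration


def scan(inputs):
    """One pass of the logic, written in the order the story is told."""
    # First, calculate each whozit on its own.
    whozit_1 = inputs["raw_1"] * SCALE
    whozit_2 = inputs["raw_2"] * SCALE
    whozit_3 = inputs["raw_3"] * SCALE

    # Only then combine them into the average.
    return (whozit_1 + whozit_2 + whozit_3) / 3.0


average = scan({"raw_1": 1.0, "raw_2": 2.0, "raw_3": 3.0})
```

Interleaving a running sum between the three calculations would give the same number, but the reader would have to untangle two thoughts at once.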

Point #2 (keep things that change together grouped together) is a pragmatic rule. In general it would be nice if your code were structured in a way that you only had to make changes in one place, but as we know, that’s not always the case. If you do have a situation where changing one piece of logic means you likely have to change another piece of logic, consider putting those two rungs together, as close as you can manage.

Finally, “separate the why from the how”. Sometimes the “how” is as simple as turning on an output, and in that case this rule doesn’t apply, but sometimes you run across complicated logic just to, say, send a message to another controller, or search for something in an array based on a loop (yikes). In that case, try to remove the complexity of the “how” away from the upper level flow of the “why”. Don’t interrupt the story with gory details, just put that into an appendix. Either stuff that “how” code in the bottom of the same program, or, better yet, move it into its own program or function block.
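A small Python sketch of that split, using the array search as the “how”. The function names and the part-storage scenario are assumptions chosen for the example, not something from a real machine.

```python
def find_first_free_slot(slots):
    """The 'how': loop over the array and return the first empty index, or -1."""
    for index, occupied in enumerate(slots):
        if not occupied:
            return index
    return -1


def store_part(part_arrived, slots):
    """The 'why': when a part arrives and there is room, store it."""
    if not part_arrived:
        return None
    slot = find_first_free_slot(slots)
    if slot < 0:
        return None  # storage is full, nothing to do
    slots[slot] = True
    return slot


slots = [True, False, True]
stored_at = store_part(True, slots)
```

The top-level function reads like the story, and the loop lives in its own appendix where a reader only goes if they need the details.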

None of these are hard and fast rules, but they are generally accepted ways of managing the complexity of your program. You have to draw those lines somewhere, so give a little thought to it at first, and save your reader a boatload of confusion.