This information is for a Fanuc R30-iA, RJ3-iA or RJ3-iB controller but might work with other ones.

If you’re looking for a way to send the robot world TCP position (X, Y, Z, W, P, R) over to the PLC, it’s not actually that difficult. The robot can make the current joint and world position available in variables, and you can copy them to a group output in a background logic task. There is one caveat though: the values only update when you’re running a program. They don’t update while jogging. However, there is a work-around for this too.

First you should make sure that the feature to copy the position to variables is enabled. To get to the variables screen, use MENU, 0 (Next), 6 (System), F1 (Type), Variables.

Find this variable and set it to 1 (or True): $SCR_GRP[1].$m_pos_enb

The name of that variable is Current position from machine pulse

Now create a new robot program, and in it write the following:

GO[1:X POS]=($SCR_GRP[1].$MCH_POS_X*10)

GO[2:Y POS]=($SCR_GRP[1].$MCH_POS_Y*10)

GO[3:Z POS]=($SCR_GRP[1].$MCH_POS_Z*10)

GO[4:W ANG]=($SCR_GRP[1].$MCH_POS_W*100)

GO[5:P ANG]=($SCR_GRP[1].$MCH_POS_P*100)

GO[6:R ANG]=($SCR_GRP[1].$MCH_POS_R*100)

Note that I’ve multiplied the X, Y, and Z positions by 10, so you will have to divide by 10 in your PLC. Likewise I multiplied the W, P, and R angles by 100, so divide by 100 in the PLC.

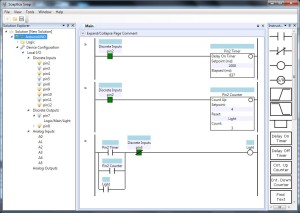

To run this program in the background, use MENU, 6 (Setup), F1 (Type), 0 (Next), BG Logic. Configure it to run your new program as a background task.

Obviously you need to send these group outputs to the PLC. Ethernet/IP is great for this, but you can use hardwired interlocks too. You need to make sure that you have enough bits to handle the full range of motion. A 16-bit integer should work pretty well for all of these. Note that the robot will happily send negative numbers to a group output as two’s complement, so make sure you map the input to the PLC as a signed 16-bit integer (a.k.a. INT in most PLCs). For the X, Y, and Z positions, a 16-bit integer will give you from +3276.7 mm to -3276.8 mm of total range. For the W, P, and R angles you’ll get +327.67 deg to -327.68 deg. For most applications this is good (remember this is TCP, not joint angles). Please check that these are suitable though.

As I said, these numbers don’t update while you’re jogging, and won’t update until the robot starts a move in a program. One little trick is to do a move to the current position at the start of your program:

PR[100:SCRATCH]=LPOS

J PR[100:SCRATCH] 10% FINE

This starts sending the position without moving the robot. In my programs I typically enter a loop waiting for an input from the PLC, and inside this loop I turn a DO bit on and off. The PLC detects this as a “ready for command” heartbeat, and as long as the PLC sees this pulsing, then it knows the program is running and the position data is valid.

Another trick you can use is to detect when the robot has been jogged:

DO[n]=$MOR_GRP[1].$jogged

The name of this variable is Robot jogged. The description from the manual is: “When set to TRUE,the robot has been jogged since the last program motion. Execution of any user program will reset the flag.”

That’s how you get the world position of the TCP into the PLC. If you just want joint angles, you can use $SCR_GRP[1].$MCH_ANG[n] as the variable, where “n” is the joint number.

Important note: The I/O will probably change asynchronously to the program scan, so what you want to do is make a copy of the X, Y, Z, W, P, R values coming into the PLC and compare the current values to the values from the last scan. If they haven’t changed, then update your actual values, otherwise throw them away because they might not be valid. If you have a fast scanning PLC and I/O then you should still be able to keep up with the robot even during a fast move. If you have a slow scan time on your PLC, then you might only get valid stable values when the robot is stopped.

Now what if you want to know what the TCP position is relative to one of your user frames? The robot controller doesn’t seem to give you access to this, but the PLC can at least calculate the X, Y, and Z positions of the TCP in your user frame itself, given the world position and the user frame parameters.

First you need to find the accurate user frame parameters. Under the normal frames screen you can only get one decimal point of accuracy, but you need the full 3 decimal points to have your numbers in the PLC match the user frame position given in the robot. You can find these accurate positions in a variable: use MENU – 0,6 (SYSTEM) – F1 (TYPE) – Variables – $MNUFRAME[1,9] – F2 (DETAIL). The second index in square bracket is the frame number, so $MNUFRAME[1,1] is frame one and $MNUFRAME[1,2] is frame 2. Copy these numbers down exactly.

Here’s the math for calculating the TCP relative to your user frame. All variables are LREAL (which is a 64-bit floating point variable). I don’t know if you can use a regular 32-bit float or not. Result is your TCP in user frame. Point is your point in world frame (from the robot) and Frame is the accurate user frame data you copied from the $MNUFRAME[] variable.

Result.X_mm := Point.X_mm - Frame.X_mm;

Result.Y_mm := Point.Y_mm - Frame.Y_mm;

Result.Z_mm := Point.Z_mm - Frame.Z_mm;

RadiansW := DegreesToRadians(-Frame.W_deg);

CosOfAngleW := COS(RadiansW);

SinOfAngleW := SIN(RadiansW);

RadiansP := DegreesToRadians(-Frame.P_deg);

CosOfAngleP := COS(RadiansP);

SinOfAngleP := SIN(RadiansP);

RadiansR := DegreesToRadians(-Frame.R_deg);

CosOfAngleR := COS(RadiansR);

SinOfAngleR := SIN(RadiansR);

// Fanuc applies rotations WPR as W (around Z), P (around Y), R (around X)

// AROUND Z

temp := Result.X_mm;

Result.X_mm := Result.X_mm * CosOfAngleR - Result.Y_mm * SinOfAngleR;

Result.Y_mm := Result.Y_mm * CosOfAngleR + temp * SinOfAngleR;

// AROUND Y

temp := Result.Z_mm;

Result.Z_mm := Result.Z_mm * CosOfAngleP - Result.X_mm * SinOfAngleP;

Result.X_mm := Result.X_mm * CosOfAngleP + temp * SinOfAngleP;

// AROUND X

temp := Result.Y_mm;

Result.Y_mm := Result.Y_mm * CosOfAngleW - Result.Z_mm * SinOfAngleW;

Result.Z_mm := Result.Z_mm * CosOfAngleW + temp * SinOfAngleW;

Note that DegreesToRadians() is just PI*deg/180.

Run that on your PLC and check that the values in your Result variable match the user frame TCP position reported on the teach pendant.

I haven’t gotten around to calculating the W, P, and R angles of the TCP in user frame yet. Currently I just look at W, P, and R in world frame if I need to know if I’m “pointed at” something. If you get the math to work for W, P, and R, I’d really appreciate if you could share it.