Every once in a while I’m talking with someone and they open up about their beliefs. I’m pretty sure this is a normal thing humans do with each other, but it always catches me off guard.

Sometimes their beliefs are strongly at odds with my worldview, like they might say, “You know, I really think the moon landings had to be faked.” I always react immediately, and it doesn’t appear to come from the thinking part of my brain. No, the first thing that happens, completely automatically, is an emotional reaction. I know because I can feel my face turn red.

It only lasts a few seconds. I automatically take a deep breath. The thinking part of my brain starts working again. “Remember,” I tell myself, “there’s always a chance they have information you haven’t heard yet.”

I ask, “Well, how do you know? What convinced you?”

“You know, I’ve been watching this guy on YouTube and he just makes a lot of sense,” they say.

The Rational Animal?

These people I talk with aren’t dumb. They’re logical, thoughtful people. In fact, they couldn’t do their jobs without being ruthlessly analytical.

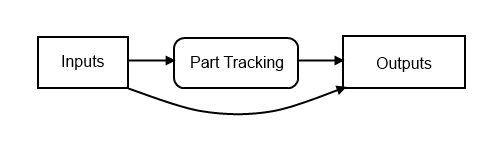

I often have to diagnose a machine where the problem is clearly in the I/O network. Whether it’s DeviceNet, EtherNet/IP, or EtherCAT, we’re often dealing with a daisy-chain configuration. You open your diagnostic tool and see that nodes A, B, and C are online, but nodes D and E aren’t. Clearly the problem is between nodes C and D.

This is where I say, “Well, it can only be one of three things: the transmitter in node C, the communication cable, or the receiver in node D.” I usually check the cable first, not because it’s the most likely culprit, but because it’s the easiest to check: I just grab my 100-foot cable and temporarily swap it in for the existing one. Same problem? It’s not the cable. Now it’s either the transmitter or the receiver.

Next I can try bypassing node D. If I connect node C directly to node E and node E starts communicating, then node C’s transmitter is fine and the problem is node D. Problem #1 solved. Replace node D, pack up the tools, and problem #2 gets a promotion.
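For anyone who likes to see a procedure spelled out, here’s a minimal Python sketch of that elimination. It assumes there is exactly one fault, that the diagnostic snapshot is a list of hypothetical (name, online) pairs in chain order, and that you run the two tests by hand and feed in the results. The function names are mine, not from any real diagnostic tool.

```python
from typing import Optional

# Hypothetical diagnostic snapshot: (node name, online?) in daisy-chain order.
Chain = list[tuple[str, bool]]


def find_break(chain: Chain) -> Optional[tuple[Optional[str], str]]:
    """Return (last online node, first offline node), or None if all are online.

    The upstream node is None when the very first node is already offline.
    """
    upstream: Optional[str] = None
    for name, online in chain:
        if not online:
            return upstream, name
        upstream = name
    return None


def isolate_fault(upstream: str, downstream: str,
                  cable_swap_fixes_it: bool, bypass_fixes_it: bool) -> str:
    """Narrow the three suspects down to one, assuming a single fault.

    cable_swap_fixes_it: did temporarily replacing the cable fix the problem?
    bypass_fixes_it: did connecting `upstream` straight to the node after
    `downstream` bring that node online?
    """
    # Easiest test first: swap the cable.
    if cable_swap_fixes_it:
        return f"cable between {upstream} and {downstream}"
    # The upstream transmitter can drive the next node, so the bypassed node is at fault.
    if bypass_fixes_it:
        return f"receiver in node {downstream}"
    # Neither test helped, so the upstream transmitter is the remaining suspect.
    return f"transmitter in node {upstream}"


if __name__ == "__main__":
    chain = [("A", True), ("B", True), ("C", True), ("D", False), ("E", False)]
    up, down = find_break(chain)
    print(isolate_fault(up, down, cable_swap_fixes_it=False, bypass_fixes_it=True))
```

With the machine from the example, the break shows up between C and D, the cable swap doesn’t help, and bypassing D brings E online, so the sketch prints `receiver in node D`. Checking the cable first mirrors the order I actually work in: cheapest test first, not most likely suspect first.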

Why does logical thought come so easily in this case, but completely elude us in other cases?

Bias?

There’s one very important distinction between these cases. When I’m diagnosing the I/O network on a machine, I don’t have a vested interest in whether it’s the transmitter, cable, or receiver that’s faulty. I’m an unbiased judge. I don’t identify with any of them.

That isn’t always true. If I’m working on the same machine with a co-worker, and they designed the transmitter, and I designed the receiver, then I really wouldn’t want the receiver to be the problem, would I? What would happen if it was the receiver? Would I feel embarrassed in front of my team? Would I lose standing within the group? Probably not where I work, but it’s conceivable. In some places it might be quite likely.

One thing’s for sure… our impartiality is in question.

Perhaps more importantly, the machine doesn’t have an agenda. It’s not actively trying to influence you the way a person can. To diagnose a machine, all you need is logic. To listen to an argument, you need to think critically.

Fight? Flight?

Our bodies and our minds are finely tuned to threats in our environment. When something threatening happens, our bodies initiate a stress response (also called the fight-or-flight response) by releasing hormones that get us ready to deal with the threat. It happens when a deer jumps out in front of your vehicle at night. You react quickly and automatically, but afterward you can feel the physical changes. Your heart is racing, your senses are sharper, and your reactions are faster.

How does the body improve your reaction time? Less thinking.

Oh, later it may feel like you saw the deer jump out, you hit the brakes and swerved out of the way, all because you decided to do it. But you didn’t decide anything. You reacted, and the thinking part of your brain couldn’t possibly have worked fast enough to be a part of that process.

How does the automatic part of our brain know what to do? I’m not sure. I’ve often wondered if that’s what our dreams are: our brains simulating stressful situations, letting the thinking part do its thing, and recording the result for future automatic playback in a stressful situation. Of course, I have no evidence of that, but it’s fun to speculate.

You see, it’s not just deer jumping out at us that causes stress. Many of the most common bad dreams are about social embarrassments, like showing up naked in an inappropriate place, or showing up late to a meeting.

Not only are we finely tuned to physical threats, but also to threats to our social status. We’re very sensitive to anyone thinking badly about us, or thinking badly about a group we identify with. Nobody wants to be voted off the island. It isn’t just that it makes us feel bad… it’s that it causes our body to prepare for a fight. It inhibits the thinking part of our brain.

It’s Not About the Moon Landing

So when someone tells me they think the moon landings were faked, my body somehow senses a threat and reacts. Why?

It doesn’t make sense to be upset because someone is wrong. We’re wrong all the time. Every disagreement involves at least one person being wrong, but disagreements lead to learning. I think we should have the right to say things that are wrong (just don’t go shouting “Fire!” in a crowded theater).

I didn’t react because I thought they were wrong. The reaction was almost instant, so I wasn’t thinking anything. Any disagreement causes the reaction, because any disagreement is a potential threat.

It may not seem like it, but we go out of our way to avoid disagreements, particularly with the people we interact with directly. In fact we tend to align our views with our social circle. People measurably change their political views when they move to a new place. Some people will give an answer they know to be wrong just to conform.

Winning Friends? Influencing People?

I really am curious. I’m fascinated by how stuff works, whether it’s stars and planets, subatomic particles, machines, or even people. I really want to know the truth, and I don’t actually have a reason for wanting to know. I’m just curious. But I’m also comfortable with the answer, “I don’t know.”

I think we all tend to assume everyone else is like us, and I was no different. I assumed everyone else was just curious like me. I now see that’s clearly not true. We’re hard-wired to care about belonging in a group. Belonging is comfortable. It reduces stress. We crave it. There’s safety in numbers.

A few years ago I was at a dinner hosted by a local startup incubator and maker space. Not big internet startups, just local people starting small businesses. In front of us, there was a young woman who was growing a business that sold dietary supplements and provided nutritional advice and that sort of thing. I asked her how it was going, and she said, in a sort of defeated tone, “I’ve learned that telling people what they need to hear doesn’t work. I just tell them what they want to hear.”

It’s funny what we remember. I don’t think I’ll ever forget that short conversation.

This Guy on YouTube

“You know, I’ve been watching this guy on YouTube and he just makes a lot of sense.”

Partly this is a problem with videos. When we read words on a page, we have more time to reflect, to think critically. We can read at our own pace. Videos don’t work like that.

But really, it’s pretty easy to convince you of something if believing it makes you feel good. Every rock band walks out on stage and tells the crowd how awesome their city is. The audience always agrees, but let’s face it… some of those places have to suck.

Also, since we’re so finely tuned to threats, it’s actually pretty easy to convince you of something if believing it makes you feel threatened. We’re tuned to threats because over-reacting is a better survival strategy than under-reacting. It’s better to err on the side of caution and assume there might be a lion hiding in that tall grass. If you’re wrong, you got some extra exercise; if you’re right, it saved your life.

Thinking critically is hard. Like… really hard. We’re not built to do it.

If you want to start thinking critically about a video, or an article, you need to figure out who the author is, and what their motivation is. It’s rarely curiosity. It’s usually money or status. People post videos to YouTube to make money, and they do that by getting more and more people to watch their videos. They don’t need to post factually accurate information. They just need to post something people will share. Popular videos just say what people want to hear. They appeal to emotion, not logic.

But if you really want to be a critical thinker, it’s much harder than that. Before you watch that video or read that article, you need to stop and ask yourself, “What do I want them to say?” Because unless you’re watching a video or reading an article about a topic that means nothing to you (doubtful), then you’re biased, and you need to be honest with yourself about your own biases before you can worry about theirs.

That’s hard.