Imagine a machine with some number of pumps. We have the logic for each pump in its own routine: Pump1, Pump2, etc.

Somewhere in our program we want to know if any of the pumps are running. We write a rung of logic for it: the Pump Running contact of each pump in parallel, driving an AnyPumpRunning coil.

This machine gets changed a lot and the number of pumps changes frequently. Any time we add or remove a pump we need to remember to revisit this rung and modify it. By definition, this rung is separate from the Pump logic (it comes after it).

If we remove Pump2 and remove the tags or variables associated with Pump2, then hopefully the editor will be smart enough to tell us that we have a contact referencing a tag or variable that no longer exists.

But what if we’re adding a pump? When we add Pump4, what prompts us to revisit this rung? What if I’m not even familiar with this code, because it’s been a long time or I wasn’t the original programmer? Maybe it’s not pumps, but steps, or missions, or something else. There could be hundreds. The fact is that this is a very common problem in ladder logic programming, and we just live with it. We shouldn’t. We should ask our PLC vendors for better tools.

Now, there are hacky ways to solve this problem, so that when I copy a pump routine I don’t have to remember to go update another routine.

For one, I could put all the Pump Running bits in an array indexed from 1 to the maximum number of pumps, and replace my pleasant little Any Pump Running rung with a FOR loop. That only works if my pumps are numbered sequentially, of course. It’s also really ugly, and it makes it difficult to look at the AnyPumpRunning coil and follow it back to see which pump is running. But I could do it. If I had no self-respect.
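To make the shape of that FOR loop concrete, here is a minimal sketch written in C# rather than ladder logic. The names PumpScan, MaxPumps and pumpRunning are made up for illustration, and the array keeps index 0 unused so the pumps can be numbered from 1 as described above.

```csharp
using System;

public static class PumpScan
{
    // Hypothetical upper bound on the number of pumps.
    public const int MaxPumps = 8;

    // pumpRunning[1..MaxPumps] holds the Pump Running bits; index 0 is unused.
    public static bool AnyPumpRunning(bool[] pumpRunning)
    {
        bool any = false;
        for (int i = 1; i <= MaxPumps; i++)
        {
            if (pumpRunning[i])
            {
                any = true;
            }
        }
        return any;
    }

    public static void Main()
    {
        var pumpRunning = new bool[MaxPumps + 1];
        pumpRunning[2] = true; // Pump2 is running
        Console.WriteLine(AnyPumpRunning(pumpRunning));
    }
}
```

The result only tells you that some element was true. Nothing in the loop points back at the pump that set it, which is the cross referencing problem in miniature.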

Another hacky solution is to use a Reset (unlatch) instruction on a coil at the beginning of the scan, before any of the pump logic… let’s call it “tempAnyPumpRunning”. Then, in parallel with each Pump Running coil, I could use a Set (latch) instruction on the tempAnyPumpRunning coil. Finally, after all the pump logic, I could use the tempAnyPumpRunning coil to drive the real AnyPumpRunning coil. I mean, that would work. It’s less ugly than a FOR loop, but still a bit ugly, and it suffers from the same cross referencing problem as the FOR loop. I’m embarrassed to say I’ve done this. It turns out I have no self-respect.
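Here is a rough C# sketch of that reset-then-latch pattern, with one method standing in for each pump routine and Scan() standing in for one PLC scan. The Machine class and its field layout are assumptions for illustration only; in a real program the Pump Running bits would be driven by the pump logic itself.

```csharp
using System;

public class Machine
{
    // Stand-ins for the Pump Running coils driven by each pump routine.
    public bool Pump1Running;
    public bool Pump2Running;
    public bool Pump3Running;

    public bool AnyPumpRunning;
    private bool tempAnyPumpRunning;

    // Each routine carries its own latch, so a copied routine brings it along.
    private void Pump1() { if (Pump1Running) tempAnyPumpRunning = true; }
    private void Pump2() { if (Pump2Running) tempAnyPumpRunning = true; }
    private void Pump3() { if (Pump3Running) tempAnyPumpRunning = true; }

    public void Scan()
    {
        tempAnyPumpRunning = false;          // Reset (unlatch) before any pump logic
        Pump1();
        Pump2();
        Pump3();
        AnyPumpRunning = tempAnyPumpRunning; // drive the real coil after all pump logic
    }

    public static void Main()
    {
        var machine = new Machine { Pump2Running = true };
        machine.Scan();
        Console.WriteLine(machine.AnyPumpRunning);
    }
}
```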

Each time I did it, I cursed ladder logic for not being expressive enough.

I’d like to pause for a moment and talk about how this problem is solved in a programming language with any kind of functional programming features. I’ll use C# as an example, because I’m familiar with it. In C#, if you have a collection of objects, let’s say of type Pump, and each pump has a property called Running, and you want to know if any of them are running, you can do this:

```csharp
bool anyPumpRunning = pumps.Any(p => p.Running);
```

The expression in the parentheses, p => p.Running, is a lambda: a function with one input (Pump p) that returns a boolean. The .Any(...) extension method evaluates this function on the objects in the pumps collection, and if any one of them returns true, then .Any(...) returns true. Even if we change the number of pumps, this line of code never has to change.

Similarly, C# has many such useful features:

```csharp
bool allPumpsRunning = pumps.All(p => p.Running);

int howManyPumpsRunning = pumps.Count(p => p.Running);

double totalLitersPumped = pumps.Sum(p => p.LitersPumped);

double maxPumpRuntime = pumps.Max(p => p.Runtime);
```
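For anyone who wants to try these out, here is a small self-contained sketch. The Pump class and the sample values are made up for illustration; only the property names Running, LitersPumped and Runtime come from the snippets above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical Pump type with just the properties used above.
public class Pump
{
    public bool Running { get; set; }
    public double LitersPumped { get; set; }
    public double Runtime { get; set; }
}

public static class Program
{
    public static void Main()
    {
        var pumps = new List<Pump>
        {
            new Pump { Running = false, LitersPumped = 120.0, Runtime = 3.5 },
            new Pump { Running = true,  LitersPumped = 80.0,  Runtime = 1.25 },
            new Pump { Running = false, LitersPumped = 0.0,   Runtime = 0.0 },
        };

        bool anyPumpRunning = pumps.Any(p => p.Running);            // true: the second pump is running
        bool allPumpsRunning = pumps.All(p => p.Running);           // false
        int howManyPumpsRunning = pumps.Count(p => p.Running);      // 1
        double totalLitersPumped = pumps.Sum(p => p.LitersPumped);  // 120 + 80 + 0
        double maxPumpRuntime = pumps.Max(p => p.Runtime);          // largest Runtime in the list

        Console.WriteLine(anyPumpRunning);
        Console.WriteLine(allPumpsRunning);
        Console.WriteLine(howManyPumpsRunning);
        Console.WriteLine(totalLitersPumped);
        Console.WriteLine(maxPumpRuntime);

        // Adding a fourth pump changes the collection, not the queries.
        pumps.Add(new Pump { Running = true, LitersPumped = 10.0, Runtime = 0.5 });
        Console.WriteLine(pumps.Count(p => p.Running));
    }
}
```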

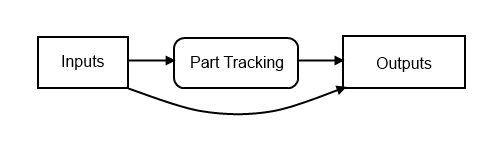

In the PLC world, we presumably created a UDT or a structure called Pump, and we created variables or tags of type Pump called Pump1, Pump2, etc. The PLC knows where all these variables are located in memory (or it could). It should be possible to create new PLC instructions that act on all variables of a given type, like for Any Pump Running:
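As a rough analogy only, and not a feature of any PLC I know of, here is what "act on all variables of a given type" could look like in C#. Every Pump adds itself to a shared list when it is created, which stands in for the PLC knowing where all the Pump tags live. The Instances list and the Name property are assumptions made up for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Pump
{
    // Every Pump ever constructed; a stand-in for the PLC's knowledge of all Pump tags.
    private static readonly List<Pump> instances = new List<Pump>();
    public static IReadOnlyList<Pump> Instances => instances;

    public string Name { get; }
    public bool Running { get; set; }

    public Pump(string name)
    {
        Name = name;
        instances.Add(this);
    }
}

public static class Program
{
    public static void Main()
    {
        var pump1 = new Pump("Pump1");
        var pump2 = new Pump("Pump2") { Running = true };

        // No list of pumps is maintained by hand anywhere.
        Console.WriteLine(Pump.Instances.Any(p => p.Running));

        // Adding Pump4 needs no change to the query above.
        var pump4 = new Pump("Pump4") { Running = true };

        // A crude cross reference: which pumps are on right now?
        foreach (var p in Pump.Instances.Where(p => p.Running))
        {
            Console.WriteLine(p.Name);
        }
    }
}
```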

You can get close to this now. You could create an AOI (or custom function block) but it would have to take an array of pumps as a parameter, and use a FOR loop inside to evaluate it.

The AOI solution still has a cross referencing problem. It’s not obvious which pumps are on. But the new instruction I described above could have a feature where you click a button and it pops up a cross reference of all the Pump Running bits, sorted by which ones are on, and double-clicking could take you directly to where that coil was being set. It would be EPIC!

It’s just a thought. Take it or leave it.